Thanks to the generous support of our sponsors and partners, members of the Supercomputing Laboratory have access to the following high-performance computing resources:

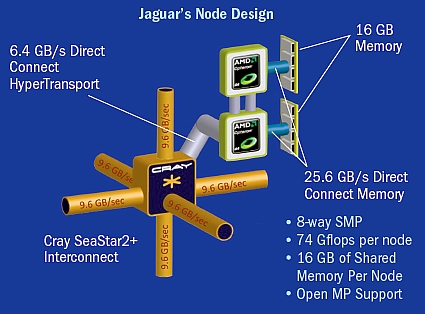

| Jaguar at Oak Ridge National Laboratory (Jaguar has been ranked No. 1 in the 34th TOP500 Supercomputer List) |

|

-

224,256 cores in 18,688 nodes

-

Each node has two Opteron 2435 "Istanbul" processors linked with dual HyperTransport connections

-

Each processor has six cores with a clock rate of 2600 MHz supporting 4 floating-point operations per clock period per core

-

299 TB of memory

-

Each node is a dual-socket, twelve-core node with 16 gigabytes of shared memory

-

Each processor has directly attached 8 gigabytes of DDR2-800 memory

-

2331 Teraflops (1759 Teraflops on Linkpack)

-

Each node has a peak processing performance of 124.8 gigaflops

-

Each core has a peak processing performance of 10.4 gigaflops

-

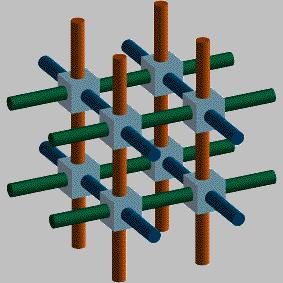

3D torus interconnection network

|

|
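The per-core, per-node, and system peak figures listed for Jaguar follow from the core count, clock rate, and floating-point operations per clock period. A minimal sketch of that arithmetic is shown here; the function name `peak_gflops` is hypothetical and chosen only for illustration, and the inputs are the values quoted in the list above.

```python
def peak_gflops(cores: int, clock_mhz: float, flops_per_cycle: int) -> float:
    """Theoretical peak in gigaflops: cores x clock rate (GHz) x flops per clock period."""
    return cores * (clock_mhz / 1000.0) * flops_per_cycle


# Values taken from the Jaguar listing: 2600 MHz, 4 flops per clock period per core.
per_core = peak_gflops(1, 2600, 4)        # one core
per_node = peak_gflops(12, 2600, 4)       # two six-core processors per node
system = peak_gflops(224_256, 2600, 4)    # all cores in the machine

print(f"per core: {per_core:.1f} gigaflops")
print(f"per node: {per_node:.1f} gigaflops")
print(f"system:   {system / 1000.0:.1f} teraflops")
```

The per-core and per-node results agree with the listed 10.4 and 124.8 gigaflops. The system total comes out near 2332 teraflops, about one teraflop above the listed 2331, which is a small difference that this sketch does not attempt to explain.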

Kraken at the University of Tennessee (ranked No. 3 on the 34th TOP500 Supercomputer List)

- 99,072 cores in 8,256 nodes
- Each node has two Opteron 2435 "Istanbul" processors linked with dual HyperTransport connections
- Each processor has six cores with a clock rate of 2600 MHz, supporting 4 floating-point operations per clock period per core
- 129 TB of memory
- Each node is a dual-socket, twelve-core node with 16 gigabytes of shared memory
- Each processor has 8 gigabytes of directly attached DDR2-800 memory
- 1028.85 teraflops peak (831.7 teraflops on Linpack)
- Each node has a peak processing performance of 124.8 gigaflops
- Each core has a peak processing performance of 10.4 gigaflops
- 3D torus interconnection network
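The total memory figures for Jaguar and Kraken can be cross-checked from the node counts and the 16 gigabytes per node. The sketch below is only an illustration (the helper `total_memory_tb` is a hypothetical name); it reports the total under both a decimal (1 TB = 1000 GB) and a binary (1 TB = 1024 GB) convention, since the listing does not say which one it uses.

```python
def total_memory_tb(nodes: int, gb_per_node: int) -> tuple[float, float]:
    """Return total memory as (decimal TB, binary TB) for a homogeneous system."""
    total_gb = nodes * gb_per_node
    return total_gb / 1000, total_gb / 1024


# Node counts and per-node memory are taken from the listings above.
jaguar_dec, jaguar_bin = total_memory_tb(18_688, 16)
kraken_dec, kraken_bin = total_memory_tb(8_256, 16)

print(f"Jaguar: {jaguar_dec:.1f} TB (decimal), {jaguar_bin:.1f} TB (binary)")
print(f"Kraken: {kraken_dec:.1f} TB (decimal), {kraken_bin:.1f} TB (binary)")
```

Under these assumptions, Jaguar's listed 299 TB matches the decimal result, while Kraken's listed 129 TB matches the binary one.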

Ranger at the University of Texas (ranked No. 9 on the 34th TOP500 Supercomputer List)

- 62,976 cores in 3,936 nodes
- Each node has four AMD Opteron quad-core 64-bit processors (16 cores in all) on a single board, as an SMP unit
- Each processor has four cores with a clock rate of 2300 MHz, supporting 4 floating-point operations per clock period per core
- 123 TB of memory
- Each node is a 16-core SMP unit with 32 GB of memory
- 579 teraflops peak (433.2 teraflops on Linpack)
- Each node has a peak processing performance of 147.2 gigaflops
- Each core has a peak processing performance of 9.2 gigaflops
- The interconnect topology is a 7-stage, full-CLOS fat tree with two large Sun InfiniBand Datacenter switches at the core of the fabric (each switch supports up to 3,456 SDR InfiniBand ports)
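Each of the three TOP500 systems above lists a theoretical peak alongside a measured Linpack result. The ratio of the two, often called Linpack efficiency, gives a rough sense of how much of the peak a dense linear algebra benchmark achieves. The following sketch computes that ratio from the figures quoted in the listings; the dictionary layout and the name `linpack_efficiency` are illustrative choices, not part of any tool.

```python
def linpack_efficiency(peak_tflops: float, linpack_tflops: float) -> float:
    """Fraction of theoretical peak achieved on the Linpack benchmark."""
    return linpack_tflops / peak_tflops


# (peak teraflops, Linpack teraflops) as quoted in the listings above.
systems = {
    "Jaguar": (2331.0, 1759.0),
    "Kraken": (1028.85, 831.7),
    "Ranger": (579.0, 433.2),
}

efficiency = {
    name: linpack_efficiency(peak, linpack)
    for name, (peak, linpack) in systems.items()
}

for name, value in efficiency.items():
    print(f"{name}: {value:.1%} of peak on Linpack")
```

With these inputs, all three systems land roughly between 75% and 81% of peak.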

Ra at Colorado School of Mines (ranked No. 134 on the 31st TOP500 Supercomputer List)

- 2,144 cores in 268 nodes
- 256 nodes, each with two Clovertown E5355 quad-core processors (512 processors in total) at a clock rate of 2670 MHz, supporting 4 floating-point operations per clock period per core
- 12 nodes, each with four Xeon 7140M dual-core processors (48 processors in total) at a clock rate of 3400 MHz, supporting 4 floating-point operations per clock period per core
- 5.6 TB of memory
- 16 GB of memory per node for 184 nodes
- 32 GB of memory per node for 72 nodes
- 23.2 teraflops peak (17 teraflops on Linpack)
- Each Clovertown node has a peak processing performance of 85.44 gigaflops
- Each Xeon node has a peak processing performance of 108.8 gigaflops
- Cisco SFS 7024 IB Server Switch
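Because Ra mixes two node types, its core count and peak performance are sums over both groups rather than a single product. The sketch below works through that sum using the figures from the listing; the `NodeGroup` class and its field names are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class NodeGroup:
    """One homogeneous group of nodes in a mixed cluster (illustrative type)."""
    nodes: int
    sockets_per_node: int
    cores_per_socket: int
    clock_mhz: float
    flops_per_cycle: int

    def cores_per_node(self) -> int:
        return self.sockets_per_node * self.cores_per_socket

    def node_peak_gflops(self) -> float:
        # cores x clock rate (GHz) x flops per clock period
        return self.cores_per_node() * (self.clock_mhz / 1000.0) * self.flops_per_cycle


# Figures taken from the Ra listing above.
clovertown = NodeGroup(nodes=256, sockets_per_node=2, cores_per_socket=4,
                       clock_mhz=2670, flops_per_cycle=4)
xeon = NodeGroup(nodes=12, sockets_per_node=4, cores_per_socket=2,
                 clock_mhz=3400, flops_per_cycle=4)
ra = [clovertown, xeon]

total_nodes = sum(g.nodes for g in ra)
total_cores = sum(g.nodes * g.cores_per_node() for g in ra)
total_peak_tflops = sum(g.nodes * g.node_peak_gflops() for g in ra) / 1000.0

print(f"{total_cores} cores in {total_nodes} nodes")
print(f"Clovertown node peak: {clovertown.node_peak_gflops():.2f} gigaflops")
print(f"Xeon node peak:       {xeon.node_peak_gflops():.1f} gigaflops")
print(f"system peak:          {total_peak_tflops:.1f} teraflops")
```

Under these inputs the totals reproduce the listed 2,144 cores in 268 nodes, the two per-node peaks, and a system peak that rounds to 23.2 teraflops.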

Alamode Lab at Colorado School of Mines

- 94 cores in 24 nodes
- Each node has two dual-core AMD Opteron 2218 processors at a clock rate of 2600 MHz, supporting 2 floating-point operations per clock period per core
- 192 GB of memory
- Each node has 8 gigabytes of memory
- 488.8 gigaflops peak performance
- Each core has a peak processing performance of 5.2 gigaflops
- Gigabit Ethernet switch

Microsoft Donated Cluster (MSC) at Colorado School of Mines

- 32 cores in 16 nodes
- Each node has a dual-core AMD Opteron 2212 processor at a clock rate of 2000 MHz, supporting 2 floating-point operations per clock period per core
- 128 GB of memory
- Each node has 8 gigabytes of memory
- 128 gigaflops peak performance
- Each core has a peak processing performance of 4 gigaflops
- Gigabit Ethernet switch

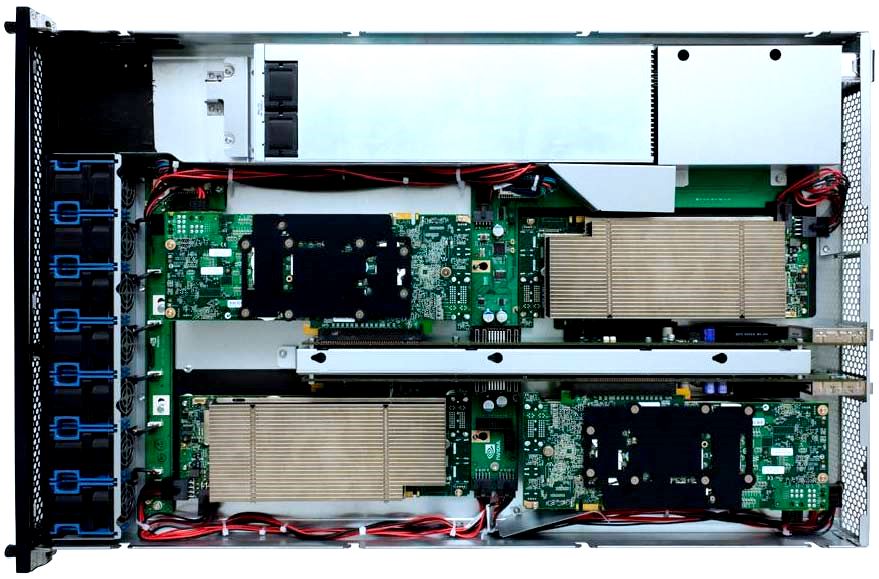

Nvidia Tesla S1070 system (cuda1.mines.edu) at Colorado School of Mines

- This 1U rack-mountable system contains 4 Nvidia Tesla T10 GPUs and has a peak computing performance of 4 teraflops (4 trillion floating-point operations per second).
- Each of the 4 graphics processing units (GPUs) in the Tesla system has 240 processing cores and 4 GB of memory, for a total of 960 cores and 16 GB.
- The individual GPUs are connected to the front-end node via a PCI Express connection.